Hash function

Let's do some modeling. We will start with the problem of storing passwords in our Identity Management System.

First of all I want to start with a statement:

We don't want to store plaintext user passwords, but we do want to know whether a provided password is valid. The basic idea is to create a function that transforms a user password into a less vulnerable, irreversible hash.

At this point I think we all know that this is the right way to do it, and you probably know that hash functions exist. Choosing a proper algorithm to produce such a hash is not so simple, though.

We can start with a basic model and define some rules for our hash algorithm later on.

PasswordHashProvider, a basic interface for providing one or more hash algorithms, should look like this:

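    import java.util.UUID;

    public interface PasswordHashProvider {

        // Produces the hash of the given password combined with the given salt.
        String encode(String password, UUID salt);

        // Re-computes the hash and compares it with the stored one.
        default boolean verify(String password, UUID salt, String hash) {
            return encode(password, salt).equals(hash);
        }
    }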

With this simple interface we can model our requirements and implement a few tests.

First of all we want our function to provide some properties like:

- it is quick to compute the hash value for any given message

- it is infeasible to generate a message from its hash

- it is infeasible to modify a message without changing the hash

- it is infeasible to find two different messages with the same hash.

To validate the first property, "it is quick to compute the hash value for any given message", we can hash a password and fail the test if the computation takes too long. Let's write a test for this:

    @Timeout(value = 100, unit = TimeUnit.MILLISECONDS)
    def "should generate hash during 100 milliseconds or faster"() {
        given:
        String simplePassword = "PASSWORD"

        when:
        String result = passwordHashProvider.encode(simplePassword, UUID.randomUUID())

        then:
        !result.isEmpty()
    }

Second property of hash function - "

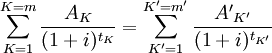

infeasible to generate a message from its hash" is quite interesting from mathematical perspective. First of all this kind of function should be irreversible (I mean there should be no function f^-1 that

f(f^-1(x)) = x ). This property is very easy to break- I mean every reversible function should be injective. To break injective easily we can assign same result for multiple values. This is the case for our password hash function - you can login with different password you defined but it should be infeasible to find the second one.
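To make the injectivity argument concrete, here is a minimal sketch of a deliberately weak function. `ToyHash` and `toyHash` are hypothetical names invented for this illustration, not part of the system described here. The function is not injective, so it cannot be reversed, yet it is useless for passwords because a second matching input is trivial to find. A real password hash has to make that search infeasible.

```java
final class ToyHash {

    // Deliberately weak: sums the character codes modulo 256,
    // so many different messages map to the same value.
    static int toyHash(String message) {
        int sum = 0;
        for (char c : message.toCharArray()) {
            sum = (sum + c) % 256;
        }
        return sum;
    }

    public static void main(String[] args) {
        // "ab" and "ba" contain the same characters, so their sums are equal.
        System.out.println(toyHash("ab") == toyHash("ba")); // true
    }
}
```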

To express the third property, "it is infeasible to modify a message without changing the hash", I wrote a simple test:

    def "should generate different hash for same passwords and different salt"() {
        given:
        List hashes = []
        Integer countSum = 0
        String password = "PASSWORD"

        when:
        (1..ITERATIONS).each {
            String hash = passwordHashProvider.encode(password, UUID.randomUUID())
            hashes.add(hash)
            countSum += hashes.count(hash)
        }

        then:
        countSum == ITERATIONS
    }

OK, I will explain one trick used here to validate this property. We want to check that changing the salt changes the password hash. A salt is a known value that is mixed into the password during hashing. It improves the quality of the hashes and complicates an attacker's life, because rainbow tables (tables of common hash/password combinations) do not work for passwords with a properly added salt.
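The effect of a salt can be sketched in a few lines. This example uses plain SHA-256 from `java.security.MessageDigest` only because it is short; that choice, and the names `SaltDemo` and `saltedSha256`, are assumptions made for illustration and not a recommendation for storing passwords.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Base64;
import java.util.UUID;

final class SaltDemo {

    // Hashes the salt followed by the password and returns the digest as Base64.
    static String saltedSha256(String password, UUID salt) {
        try {
            MessageDigest digest = MessageDigest.getInstance("SHA-256");
            digest.update(salt.toString().getBytes(StandardCharsets.UTF_8));
            digest.update(password.getBytes(StandardCharsets.UTF_8));
            return Base64.getEncoder().encodeToString(digest.digest());
        } catch (NoSuchAlgorithmException e) {
            // SHA-256 is required on every Java platform, so this should not happen.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        String password = "PASSWORD";
        // Same password, two random salts: the two printed hashes differ,
        // so a precomputed table for the unsalted password is of no use.
        System.out.println(saltedSha256(password, UUID.randomUUID()));
        System.out.println(saltedSha256(password, UUID.randomUUID()));
    }
}
```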

The second trick in this test answers the question: how do we verify that our hashes are unique? After adding each hash to the list, we count how many times it occurs in the list and add that count to a running sum. If every hash is unique, each count is 1 and the sum equals the number of iterations. If we produced the same hash for all values, the counts would be 1, 2, 3, ... and we would get the sum of an arithmetic progression: for, let's say, 100 password hashes, 100 * 101 / 2 = 5050. The sum therefore always falls in the range 100 to 5050, and only the value 100 means there were no duplicates.
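The counting trick can be checked in isolation. The sketch below mirrors the loop from the test in plain Java; `UniquenessCheck` and `countSum` are hypothetical names used only here, and the hashes are stand-in strings rather than real password hashes.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

final class UniquenessCheck {

    // Adds each hash to a running list and sums how often it occurs in that list so far.
    static int countSum(List<String> generated) {
        List<String> seen = new ArrayList<>();
        int sum = 0;
        for (String hash : generated) {
            seen.add(hash);
            sum += Collections.frequency(seen, hash);
        }
        return sum;
    }

    public static void main(String[] args) {
        List<String> unique = new ArrayList<>();
        for (int i = 0; i < 100; i++) {
            unique.add("hash-" + i);
        }
        List<String> identical = Collections.nCopies(100, "same-hash");

        System.out.println(countSum(unique));    // 100: every count is 1
        System.out.println(countSum(identical)); // 5050: 1 + 2 + ... + 100
    }
}
```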

I can't find a way to test the property "it is infeasible to find two different messages with the same hash". We have to assume that a violation of this property would be reported by security researchers, as long as we use one of the well-known algorithms.

Well known algorithms:

MD5

This algorithm fails the last property: "it is infeasible to find two different messages with the same hash". You can find details by searching for the phrase "MD5 is not collision resistant".

Bcrypt

Default password hash algorithm on OpenBSD and some other systems, including some Linux distributions.

Scrypt

What a silly name! What should I put in Google to find a Java implementation? Java scrypt? :)

This algorithm has some good properties, such as being quite memory intensive. That is good, because it protects us from farms of NVIDIA cores ready to break our computation-intensive algorithms: as of early 2016, humanity can't scale memory without huge costs.

PBKDF2

Argon2

To sum up

Lots of password hashing algorithms exist, but which one is best, or just good enough? Argon2 is certainly interesting: we can define how hard it should be on CPU and memory, and we can also define the degree of parallelism. For now I can't find a JVM implementation of this algorithm, only bindings to a C library.

PBKDF2 seems reasonable: it has native Java support (the javax.crypto package), has quite interesting properties, and is widely implemented. It has some drawbacks, like being CPU intensive rather than memory intensive (which also matters to us in cloud environments). Let's start with PBKDF2 and move to Argon2 someday (the day I find a JVM implementation with an Apache 2.0 compatible licence).
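As a starting point, here is a minimal sketch of a PBKDF2-backed provider built on `javax.crypto`. The interface is repeated from earlier in this post so the block compiles on its own. The class name `Pbkdf2PasswordHashProvider`, the `PBKDF2WithHmacSHA256` variant (available since Java 8), the iteration count, the key length, and the Base64 output format are all assumptions chosen for illustration, not settings this post prescribes. The iteration count in particular should be tuned for your hardware.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.util.Base64;
import java.util.UUID;
import javax.crypto.SecretKeyFactory;
import javax.crypto.spec.PBEKeySpec;

// Repeated from the post so that this example is self-contained.
interface PasswordHashProvider {
    String encode(String password, UUID salt);

    default boolean verify(String password, UUID salt, String hash) {
        return encode(password, salt).equals(hash);
    }
}

final class Pbkdf2PasswordHashProvider implements PasswordHashProvider {

    // Assumed values for illustration only; tune them for your environment.
    private static final int ITERATIONS = 10_000;
    private static final int KEY_LENGTH_BITS = 256;

    @Override
    public String encode(String password, UUID salt) {
        byte[] saltBytes = salt.toString().getBytes(StandardCharsets.UTF_8);
        PBEKeySpec spec =
                new PBEKeySpec(password.toCharArray(), saltBytes, ITERATIONS, KEY_LENGTH_BITS);
        try {
            SecretKeyFactory factory = SecretKeyFactory.getInstance("PBKDF2WithHmacSHA256");
            byte[] derived = factory.generateSecret(spec).getEncoded();
            return Base64.getEncoder().encodeToString(derived);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("PBKDF2 is not available on this JVM", e);
        } finally {
            // Wipe the internal copy of the password held by the key spec.
            spec.clearPassword();
        }
    }
}
```

An instance of this class could be plugged in as the `passwordHashProvider` used by the tests above. Whether it passes the 100 millisecond timeout depends on the iteration count and the machine running the test.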